在開始主題之前,先簡單介紹一下 AWS Fargate。在 AWS EC2 服務,我們可以隨意啟用 EC2 instance 作為我們的主機來使用,而在 ECS 或是 EKS 服務上,則可以直接部署容器服務,如 ECS Service/Task 或是 EKS Deployment/Pod 到 AWS 託管的主機上。換言之,對於使用者可以更減少維護主機的成本,取而代之則是依照 vCPU、memory、作業系統、CPU 架構及儲存大小等五個維度 計價 [1]。

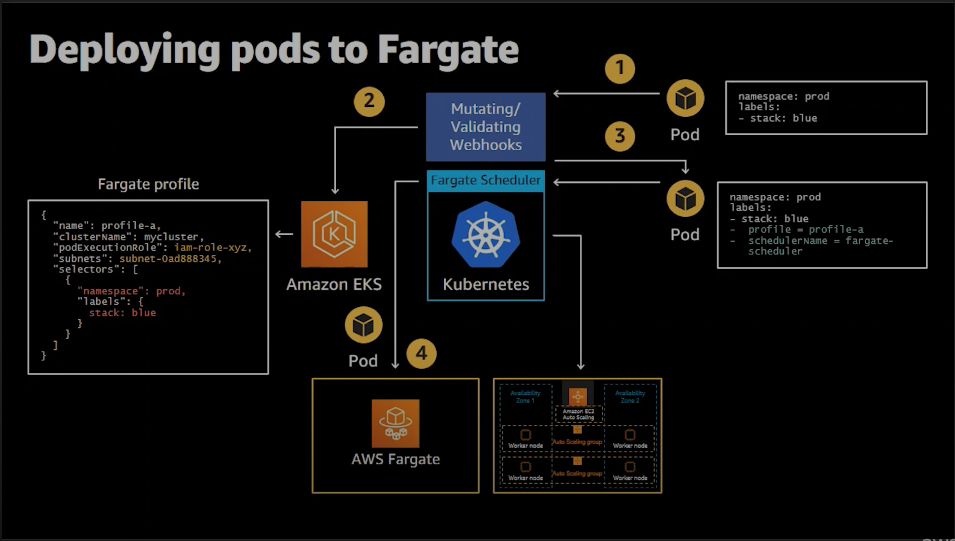

在 EKS 服務,我們則可以透過建立 Fargate Profile 方式指定 namespace 及相應 label 後[2],EKS 則可以整合 AWS Fargate 節點而無需手動管理節點。故本文將探討「為什麼 EKS cluster 可以部署至 Fargate 而非透過原生 Kubernetes 方式部署至 EKS worker node 」,希望理解 EKS 是如何更新 Pod 調度過程。

fargate-ns。$ eksctl create fargateprofile \

--cluster ironman-2022 \

--name demo-profile \

--namespace fargate-ns

2022-09-20 20:08:51 [ℹ] deploying stack "eksctl-ironman-2022-fargate"

2022-09-20 20:08:51 [ℹ] waiting for CloudFormation stack "eksctl-ironman-2022-fargate"

2022-09-20 20:09:21 [ℹ] waiting for CloudFormation stack "eksctl-ironman-2022-fargate"

2022-09-20 20:09:21 [ℹ] creating Fargate profile "demo-profile" on EKS cluster "ironman-2022"

2022-09-20 20:11:31 [ℹ] created Fargate profile "demo-profile" on EKS cluster "ironman-2022"

$ kubectl create ns fargate-ns

$ kubectl run nginx --image=nginx -n fargate-ns

$ kubectl run nginx --image=nginx -n default

$ kubectl -n default get po nginx -o yaml > default-nginx.yaml

$ kubectl -n fargate-ns get po nginx -o yaml > fargate-nginx.yaml

透過 diff 命令比對,以下僅列舉較大差異部分,其他如 timestamp 或是 namespace 不同處則省略。

$ diff fargate-nginx.yaml default-nginx.yaml

5,6d4

< CapacityProvisioned: 0.25vCPU 0.5GB

< Logging: 'LoggingDisabled: LOGGING_CONFIGMAP_NOT_FOUND'

...

...

10d7

< eks.amazonaws.com/fargate-profile: demo-profile

...

...

...

30c27

< nodeName: fargate-ip-192-168-185-38.eu-west-1.compute.internal

---

> nodeName: ip-192-168-65-212.eu-west-1.compute.internal

32,33c29

< priority: 2000001000

< priorityClassName: system-node-critical

---

> priority: 0

35c31

< schedulerName: fargate-scheduler

---

> schedulerName: default-scheduler

...

...

...

可以發現到部署至 Fargate Pod 不同處有以下幾個

接續,我們使用以下 CloudWatch Log Insights Syntax 查看 audit log 觀察 API server 是否有針對此 Pod 進行更新,整理如下時間軸及摘要重要資訊:

filter @logStream like /^kube-apiserver-audit/

| fields @timestamp, @message

| sort @timestamp asc

| filter objectRef.name == 'nginx' AND objectRef.resource == 'pods' AND verb != 'get'

| limit 10000

kubectl 建立此 Pod,其中經過 3 個 Mutating webhook,而被 Mutate webhook 0500-amazon-eks-fargate-mutation.amazonaws.com 更新 schedulerName 為 fargate-scheduler,以及上述 3 個 annotations:

annotations.mutation.webhook.admission.k8s.io/round_0_index_0: {"configuration":"0500-amazon-eks-fargate-mutation.amazonaws.com","webhook":"0500-amazon-eks-fargate-mutation.amazonaws.com","mutated":true}

annotations.mutation.webhook.admission.k8s.io/round_0_index_2: {"configuration":"pod-identity-webhook","webhook":"iam-for-pods.amazonaws.com","mutated":false}

annotations.mutation.webhook.admission.k8s.io/round_0_index_3: {"configuration":"vpc-resource-mutating-webhook","webhook":"mpod.vpc.k8s.aws","mutated":false}

annotations.patch.webhook.admission.k8s.io/round_0_index_0: {"configuration":"0500-amazon-eks-fargate-mutation.amazonaws.com","webhook":"0500-amazon-eks-fargate-mutation.amazonaws.com","patch":[{"op":"add","path":"/spec/schedulerName","value":"fargate-scheduler"},{"op":"add","path":"/spec/priorityClassName","value":"system-node-critical"},{"op":"add","path":"/spec/priority","value":2000001000},{"op":"add","path":"/metadata/labels","value":{"eks.amazonaws.com/fargate-profile":"demo-profile","run":"nginx"}}],"patchType":"JSONPatch"}

requestObject.spec.schedulerName: default-scheduler

responseObject.spec.schedulerName: fargate-scheduler

由 username eks:fargate-scheduler 更新 request 先前所提及的 Mutate webhook 0500-amazon-eks-fargate-mutation.amazonaws.com 內容。

user.username: eks:fargate-scheduler

verb: update

requestObject.spec.schedulerName: fargate-scheduler

requestObject.metadata.labels.eks.amazonaws.com/fargate-profile: demo-profile

requestObject.metadata.annotations.CapacityProvisioned: 0.25vCPU 0.5GB

requestObject.metadata.annotations.Logging: LoggingDisabled: LOGGING_CONFIGMAP_NOT_FOUND

由 username eks:fargate-scheduler 建立 Nginx Pod 及 Fargate Node fargate-ip-192-168-185-38.eu-west-1.compute.internal 綁定。

user.username: eks:fargate-scheduler

verb: create

requestObject.kind: Binding

requestObject.target.name: fargate-ip-192-168-185-38.eu-west-1.compute.internal

此兩個 patch 皆是由 kubelet 更新 Pod condition 狀態最終至 Ready

user.username: system:node:fargate-ip-192-168-185-38.eu-west-1.compute.internal

userAgent: kubelet/v1.22.6 (linux/amd64) kubernetes/35f06c9

verb: patch

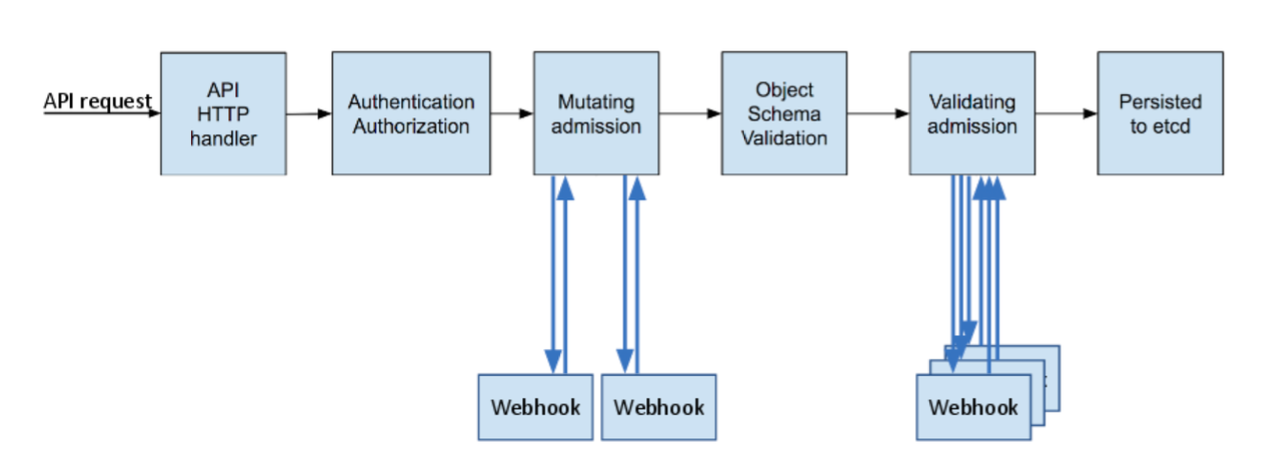

根據 Kubernetes blog A Guide to Kubernetes Admission Controllers[3],Kubernetes Admission Controllers 功能提供一種方式讓經驗證後的 API 請求修改 API 請求內的 object 或是拒絕請求的一道防衛門。Admission control 過程上可以分成兩階段,其細節流程讀如下所示:

答案:是

依照上述 audit log,其實我們就已經知道答案。在 annotations.mutation.webhook.admission.k8s.io 代表了 mutating webhook,而 round_0_index_0 代表了第一輪 mutation 中第一個 mutating webhook 並且成功 mutation[5]。而其他 mutating webhook 分別為:

我們可以透過 kubectl 命令查看對應 mutating webhook 資源,其中我並未手動建立 0500-amazon-eks-fargate-mutation.amazonaws.com mutating webhook configurations:

$ kubectl get mutatingwebhookconfigurations

NAME WEBHOOKS AGE

0500-amazon-eks-fargate-mutation.amazonaws.com 2 3h47m

pod-identity-webhook 1 6d14h

vpc-resource-mutating-webhook 1 6d14h

再次使用 CloudWatch Logs insight syntax,查看是誰幫忙建立部署此 mutating webhook 。

filter @logStream like /^kube-apiserver-audit/

| fields @timestamp, @message

| sort @timestamp asc

| filter objectRef.name == '0500-amazon-eks-fargate-mutation.amazonaws.com' AND objectRef.resource verb == 'create'

| limit 10000

由以下資訊,可以得知是由 EKS username eks:cluster-bootstrap 透過 kubectl 方式部署,比對時間與 Fargate Profile 建立時間相符。

userAgent: kubectl/v1.22.12 (linux/amd64) kubernetes/dade57b

user.username: eks:cluster-bootstrap

stageTimestamp:2022-09-20T20:09:59.446214Z

此流程圖出自於 AWS re:Invent 2020: Amazon EKS on AWS Fargate deep dive[6]

由上述 log 比對,我們可以知道流程為:

0500-amazon-eks-fargate-mutation.amazonaws.com mutating webhook。上述資訊透過 EKS 所提供 Logs 來驗證上游 Kubernetes 運作原理,倘若上述內文有所錯誤,隨時可以留言或是私訊我。